Kubernetes in Practice: Installation and Deployment

Kubernetes installation

Installing Kubernetes on macOS with minikube

curl -Lo minikube http://kubernetes.oss-cn-hangzhou.aliyuncs.com/minikube/releases/v0.25.0/minikube-darwin-amd64 && chmod +x minikube && sudo mv minikube /usr/local/bin/


minikube start --registry-mirror=https://registry.docker-cn.com

😄 minikube v1.2.0 on darwin (amd64)

✅ using image repository registry.cn-hangzhou.aliyuncs.com/google_containers

💡 Tip: Use 'minikube start -p <name>' to create a new cluster, or 'minikube delete' to delete this one.

🏃 Re-using the currently running virtualbox VM for "minikube" ...

⌛ Waiting for SSH access ...

🐳 Configuring environment for Kubernetes v1.15.0 on Docker 18.09.6

💾 Downloading kubeadm v1.15.0

💾 Downloading kubelet v1.15.0

🔄 Relaunching Kubernetes v1.15.0 using kubeadm ...

⌛ Verifying: apiserver proxy etcd scheduler controller dns

🏄 Done! kubectl is now configured to use "minikube"

restart

minikube start --registry-mirror=https://registry.docker-cn.com

😄 minikube v1.2.0 on darwin (amd64)

✅ using image repository registry.cn-hangzhou.aliyuncs.com/google_containers

💡 Tip: Use 'minikube start -p <name>' to create a new cluster, or 'minikube delete' to delete this one.

🔄 Restarting existing virtualbox VM for "minikube" ...

⌛ Waiting for SSH access ...

🐳 Configuring environment for Kubernetes v1.15.0 on Docker 18.09.6

🔄 Relaunching Kubernetes v1.15.0 using kubeadm ...

⌛ Verifying: apiserver proxy etcd scheduler controller dns

🏄 Done! kubectl is now configured to use "minikube"

how to use

nodes

qwq:api_auto_test rainmc$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

minikube Ready <none> 4m v1.15.0

pods

qwq:api_auto_test rainmc$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6967fb4995-fnh7r 1/1 Running 1 4m

kube-system coredns-6967fb4995-w4xrl 1/1 Running 1 4m

kube-system etcd-minikube 1/1 Running 0 3m

kube-system kube-addon-manager-minikube 1/1 Running 0 3m

kube-system kube-apiserver-minikube 1/1 Running 0 3m

kube-system kube-controller-manager-minikube 1/1 Running 0 3m

kube-system kube-proxy-vw7mn 1/1 Running 0 4m

kube-system kube-scheduler-minikube 1/1 Running 0 3m

kube-system storage-provisioner 1/1 Running 0 4m

services

qwq:api_auto_test rainmc$ kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 9m

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 9m

kube-system kubernetes-dashboard ClusterIP 10.107.140.81 <none> 80/TCP 3m

fix dashboard

qwq:api_auto_test rainmc$ minikube dashboard

🔌 Enabling dashboard ...

🤔 Verifying dashboard health ...

🚀 Launching proxy ...

🤔 Verifying proxy health ...

💣 http://127.0.0.1:50202/api/v1/namespaces/kube-system/services/http:kubernetes-dashboard:/proxy/ is not responding properly: Temporary Error: unexpected response code: 503

Temporary Error: unexpected response code: 503

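create clusterrolebinding add-on-cluster-admin (this grants cluster-admin to the default ServiceAccount in kube-system; acceptable on a local minikube sandbox, but avoid it on shared clusters)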

qwq:kubernetes_practice rainmc$ kubectl create clusterrolebinding add-on-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:default

clusterrolebinding "add-on-cluster-admin" created

qwq:kubernetes_practice rainmc$ git commit -m "minikube dashboard;"

qwq:kubernetes_practice rainmc$ minikube dashboard

🤔 Verifying dashboard health ...

🚀 Launching proxy ...

🤔 Verifying proxy health ...

🎉 Opening http://127.0.0.1:50556/api/v1/namespaces/kube-system/services/http:kubernetes-dashboard:/proxy/ in your default browser...

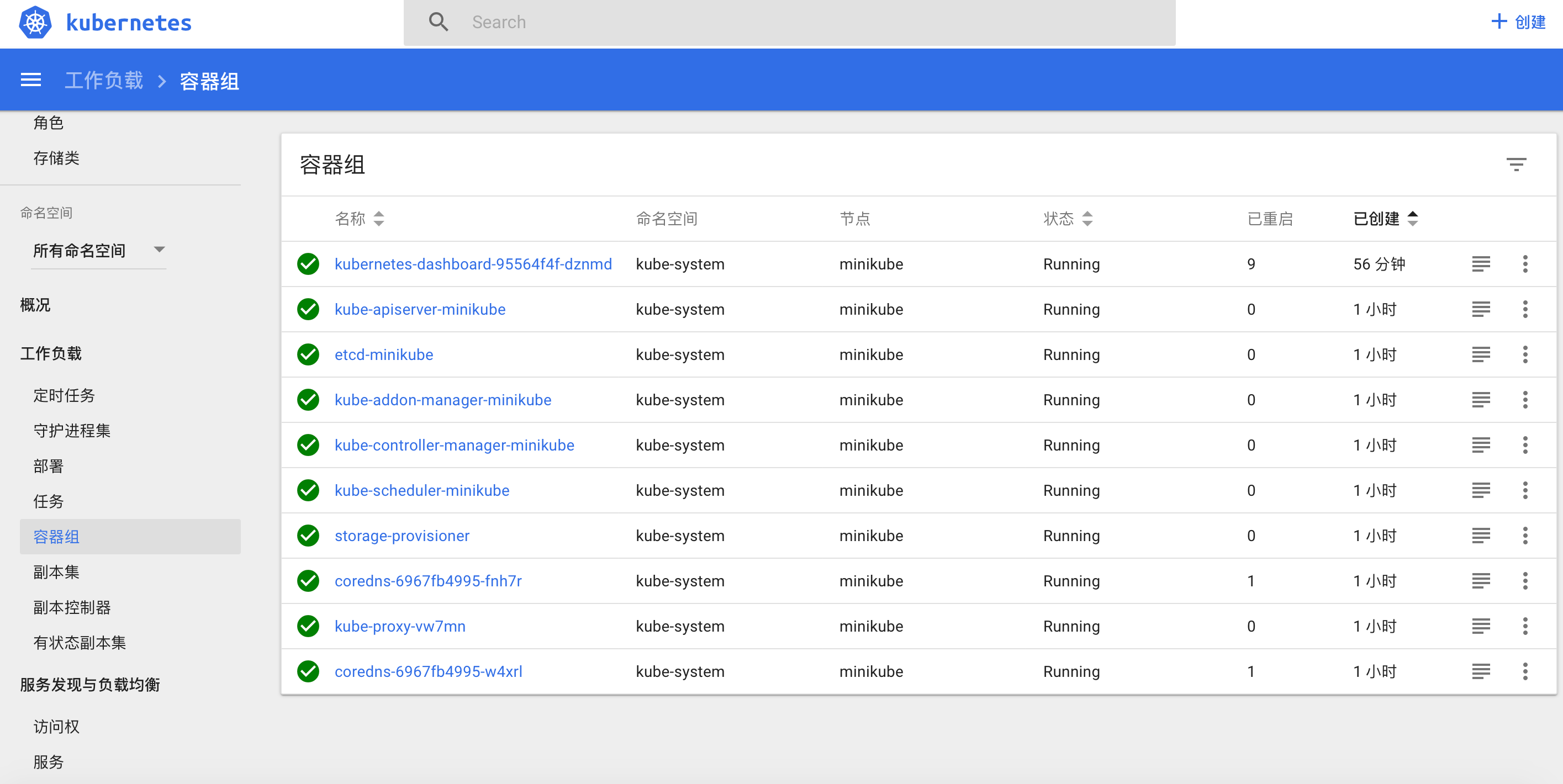

kube-dashboard console

kubeadm

Environment

Hardware

Server:

CPU:

NIC:

Operating system

- Operating system:

cat /etc/redhat-release

[root@host-12-0-0-244 ~]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (AltArch)

https://isoredirect.centos.org/altarch/7/isos/aarch64/

- Network:

● DockerHub (https://hub.docker.com/) is reachable

● The Docker download site (https://download.docker.com/linux/static/stable/aarch64/) is reachable

● All cluster nodes can reach each other, and the flannel manifest (https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml) is reachable

Network plan:

| Node | IP |
| --- | --- |
| Master | 12.0.0.243 |
| Node1 | 12.0.0.244 |
| Node2 | 12.0.0.245 |

Cluster deployment

Configure the deployment environment

1. Configure the Huawei Cloud yum repository

[root@qwq-test01 ~]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=https://mirrors.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-aarch64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=http://mirrors.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg
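The repo file above can also be written non-interactively with a heredoc, which avoids hand-editing it on every node. A minimal sketch: the function name write_k8s_repo is hypothetical, and the target path is a parameter so the output can be checked on a scratch file before writing to /etc/yum.repos.d/kubernetes.repo. Both gpgkey URLs stay on one line, separated by a space, so yum reads them as a single option.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the Huawei Cloud Kubernetes yum repo definition.
# $1: target file (default: /etc/yum.repos.d/kubernetes.repo)
write_k8s_repo() {
  local target="${1:-/etc/yum.repos.d/kubernetes.repo}"
  # Quoted 'EOF' keeps the heredoc literal (no variable expansion).
  cat > "$target" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-aarch64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=http://mirrors.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}

# Usage (as root):
#   write_k8s_repo
#   yum makecache
```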

2. Refresh the yum cache

yum makecache

[root@host-12-0-0-244 ~]# yum makecache

Loaded plugins: fastestmirror, langpacks, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

Loading mirror speeds from cached hostfile

* base: mirrors.huaweicloud.com

* extras: mirrors.huaweicloud.com

* updates: mirrors.huaweicloud.com

base | 3.6 kB 00:00:00

extras | 2.9 kB 00:00:00

kubernetes/signature | 454 B 00:00:00

Retrieving key from http://mirrors.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg

Importing GPG key 0xA7317B0F:

Userid     : "Google Cloud Packages Automatic Signing Key <gc-team@google.com>"

Fingerprint: d0bc 747f d8ca f711 7500 d6fa 3746 c208 a731 7b0f

From       : http://mirrors.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg

Is this ok [y/N]:

Importing GPG key 0xBA07F4FB:

Userid     : "Google Cloud Packages Automatic Signing Key <gc-team@google.com>"

Fingerprint: 54a6 47f9 048d 5688 d7da 2abe 6a03 0b21 ba07 f4fb

From       : http://mirrors.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg

Is this ok [y/N]: y

Retrieving key from http://mirrors.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg

kubernetes/signature | 1.4 kB 00:00:03 !!!

updates | 2.9 kB 00:00:00

(1/9): extras/7/aarch64/filelists_db | 268 kB 00:00:00

(2/9): kubernetes/filelists | 15 kB 00:00:00

(3/9): base/7/aarch64/other_db | 2.1 MB 00:00:00

(4/9): kubernetes/other | 30 kB 00:00:00

(5/9): base/7/aarch64/filelists_db | 6.1 MB 00:00:00

(6/9): updates/7/aarch64/filelists_db | 1.1 MB 00:00:00

(7/9): extras/7/aarch64/other_db | 127 kB 00:00:00

(8/9): updates/7/aarch64/other_db | 248 kB 00:00:00

(9/9): kubernetes/primary | 46 kB 00:00:00

kubernetes 332/332


Metadata Cache Created

yum clean all

yum check-update

Deploy the Master

Install base components

1. Enable the net.bridge.bridge-nf-call-iptables kernel option

sysctl -w net.bridge.bridge-nf-call-iptables=1

2. Disable swap

swapoff -a

cp -p /etc/fstab /etc/fstab.bak$(date '+%Y%m%d%H%M%S')

sed -i "s/\/dev\/mapper\/centos-swap/#\/dev\/mapper\/centos-swap/g" /etc/fstab
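The sed command above only matches the /dev/mapper/centos-swap entry of a default CentOS LVM layout. A minimal sketch that instead comments out every active fstab line whose filesystem type (the third field) is swap; the function name disable_swap_in_fstab is hypothetical, and it assumes a standard whitespace-separated fstab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab file.
# $1: fstab path (default /etc/fstab). A timestamped backup is written first.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak$(date '+%Y%m%d%H%M%S')"
  # Field 3 is the filesystem type; lines whose first field starts with '#'
  # are already comments and are printed unchanged.
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$fstab.tmp" \
    && cat "$fstab.tmp" > "$fstab" \
    && rm -f "$fstab.tmp"   # cat > keeps the original file's owner and mode
}

# Usage (as root):
#   swapoff -a && disable_swap_in_fstab
#   cat /proc/swaps          # only the header line should remain
```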

kubelet has required swap to be disabled since version 1.8 (by default it refuses to start while swap is on).

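Why disable swap?

1. What is swap for?

A swap partition is exchange space on disk (on Windows it is called virtual memory).

When physical memory runs short, the operating system moves data that is temporarily unused from physical memory to the swap partition, freeing enough physical memory for the programs currently running.

2. Why disable it?

With swap enabled, exchanging data between disk and memory performs poorly and slows program execution, and some software is designed on the assumption that it will never be swapped out. Kubernetes is one such case: the kubelet's memory requests, limits, and eviction decisions assume that Pod memory stays in RAM.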

Install the K8s components

yum install -y kubelet kubeadm kubectl kubernetes-cni

echo "net.bridge.bridge-nf-call-iptables=1" > /etc/sysctl.d/k8s.conf
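The echo above only writes the file: the setting is not applied until the sysctl configuration is reloaded, and the net.bridge.* keys exist only once the br_netfilter kernel module is loaded. A minimal sketch with a hypothetical function name, write_k8s_sysctl_conf; the target path is a parameter so it can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: persist the bridge netfilter setting across reboots.
# $1: target file (default /etc/sysctl.d/k8s.conf)
write_k8s_sysctl_conf() {
  local target="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$target" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Usage (as root):
#   modprobe br_netfilter      # creates /proc/sys/net/bridge/*
#   write_k8s_sysctl_conf
#   sysctl --system            # reload every file under /etc/sysctl.d
```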

Enable the kubelet service

systemctl enable kubelet

Download the base component images

kubeadm config images list

[root@host-12-0-0-244 ~]# kubeadm config images list

I1111 17:31:44.904991 17549 version.go:96] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled (Client.Timeout exceeded while awaiting headers)

I1111 17:31:44.905319 17549 version.go:97] falling back to the local client version: v1.14.2

k8s.gcr.io/kube-apiserver:v1.14.2

k8s.gcr.io/kube-controller-manager:v1.14.2

k8s.gcr.io/kube-scheduler:v1.14.2

k8s.gcr.io/kube-proxy:v1.14.2

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.3.10

k8s.gcr.io/coredns:1.3.1

Pull the images (arm64 builds from the mirrorgooglecontainers mirror on Docker Hub)

docker pull docker.io/mirrorgooglecontainers/kube-apiserver-arm64:v1.14.2

docker pull docker.io/mirrorgooglecontainers/kube-controller-manager-arm64:v1.14.2

docker pull docker.io/mirrorgooglecontainers/kube-scheduler-arm64:v1.14.2

docker pull docker.io/mirrorgooglecontainers/etcd-arm64:3.3.10

docker pull docker.io/mirrorgooglecontainers/kube-proxy-arm64:v1.14.2

docker pull docker.io/mirrorgooglecontainers/pause-arm64:3.1

docker pull docker.io/coredns/coredns:1.3.1

Retag the downloaded images with the k8s.gcr.io names that kubeadm expects.

docker tag docker.io/mirrorgooglecontainers/kube-apiserver-arm64:v1.14.2 k8s.gcr.io/kube-apiserver:v1.14.2

docker tag docker.io/mirrorgooglecontainers/kube-controller-manager-arm64:v1.14.2 k8s.gcr.io/kube-controller-manager:v1.14.2

docker tag docker.io/mirrorgooglecontainers/kube-scheduler-arm64:v1.14.2 k8s.gcr.io/kube-scheduler:v1.14.2

docker tag docker.io/mirrorgooglecontainers/etcd-arm64:3.3.10 k8s.gcr.io/etcd:3.3.10

docker tag docker.io/mirrorgooglecontainers/kube-proxy-arm64:v1.14.2 k8s.gcr.io/kube-proxy:v1.14.2

docker tag docker.io/mirrorgooglecontainers/pause-arm64:3.1 k8s.gcr.io/pause:3.1

docker tag docker.io/coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1

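Remove the old tags.

The commands are as follows; the result is shown in Figure 1-4. Because each image is still referenced by its k8s.gcr.io tag, docker rmi only removes the mirror tag, not the image itself.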

docker rmi docker.io/mirrorgooglecontainers/kube-apiserver-arm64:v1.14.2

docker rmi docker.io/mirrorgooglecontainers/kube-controller-manager-arm64:v1.14.2

docker rmi docker.io/mirrorgooglecontainers/kube-scheduler-arm64:v1.14.2

docker rmi docker.io/mirrorgooglecontainers/etcd-arm64:3.3.10

docker rmi docker.io/mirrorgooglecontainers/kube-proxy-arm64:v1.14.2

docker rmi docker.io/mirrorgooglecontainers/pause-arm64:3.1

docker rmi docker.io/coredns/coredns:1.3.1
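The pull, tag, and rmi steps above all follow one naming rule: k8s.gcr.io/NAME:TAG comes from docker.io/mirrorgooglecontainers/NAME-arm64:TAG, except CoreDNS, which comes from docker.io/coredns/coredns. A sketch that derives all three commands from the kubeadm config images list output; the function names are hypothetical, and by default it only prints the commands (set RUN=1 to execute them).

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a k8s.gcr.io image name to the Docker Hub mirror used above.
mirror_image_for() {
  local name="${1#k8s.gcr.io/}"   # e.g. kube-apiserver:v1.14.2
  local repo="${name%%:*}"        # kube-apiserver
  local tag="${name#*:}"          # v1.14.2
  if [ "$repo" = "coredns" ]; then
    # The steps above pull CoreDNS from its own repository, without -arm64
    echo "docker.io/coredns/coredns:${tag}"
  else
    echo "docker.io/mirrorgooglecontainers/${repo}-arm64:${tag}"
  fi
}

# Print (default) or run (RUN=1) pull / tag / rmi for each k8s.gcr.io image given.
retag_images() {
  local img src cmd
  for img in "$@"; do
    src="$(mirror_image_for "$img")"
    for cmd in "docker pull $src" "docker tag $src $img" "docker rmi $src"; do
      if [ "${RUN:-0}" = "1" ]; then
        $cmd          # unquoted on purpose: split into command and arguments
      else
        echo "$cmd"
      fi
    done
  done
}

# Usage:
#   retag_images $(kubeadm config images list --kubernetes-version=v1.14.2)          # dry run
#   RUN=1 retag_images $(kubeadm config images list --kubernetes-version=v1.14.2)
```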

[root@qwq-test01 ~]# kubeadm init --pod-network-cidr=10.244.0.0/16 --kubernetes-version=1.14.2

I1111 16:33:51.644819 23698 version.go:96] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled (Client.Timeout exceeded while awaiting headers)

I1111 16:33:51.645016 23698 version.go:97] falling back to the local client version: v1.14.2

[init] Using Kubernetes version: v1.14.2

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [qwq-test01 localhost] and IPs [12.0.0.243 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [qwq-test01 localhost] and IPs [12.0.0.243 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [qwq-test01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 12.0.0.243]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 57.598568 seconds

[upload-config] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.14" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --experimental-upload-certs

[mark-control-plane] Marking the node qwq-test01 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node qwq-test01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: cjgsme.q46fct95kwsvf45t

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 12.0.0.243:6443 --token cjgsme.q46fct95kwsvf45t \

--discovery-token-ca-cert-hash sha256:03ce8ad5e62e573a6bb7b0b3a4a4583137dfcc8f77ed54b9c3fc4c502fe7363e

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

get nodes (the node reports NotReady until a pod network add-on such as flannel is installed)

root@qwq-test04:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

qwq-test04 NotReady master 5m3s v1.19.3

Deploy the Nodes

Install base components

Same as on the Master.

Join the cluster

systemctl enable docker.service

kubeadm join 12.0.0.243:6443 --token cjgsme.q46fct95kwsvf45t --discovery-token-ca-cert-hash sha256:03ce8ad5e62e573a6bb7b0b3a4a4583137dfcc8f77ed54b9c3fc4c502fe7363e

kubeadm join 12.0.0.243:6443 --token cjgsme.q46fct95kwsvf45t \

> --discovery-token-ca-cert-hash sha256:03ce8ad5e62e573a6bb7b0b3a4a4583137dfcc8f77ed54b9c3fc4c502fe7363e

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
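If the token printed by kubeadm init has expired (bootstrap tokens last 24 hours by default) or the join command was lost, it can be recreated on the Master: kubeadm token create --print-join-command prints a fresh command. The CA hash can also be computed directly with the openssl pipeline from the kubeadm documentation, wrapped here in a hypothetical helper, ca_cert_hash.

```shell
#!/usr/bin/env bash
# Hypothetical helper: value for --discovery-token-ca-cert-hash (without "sha256:").
# $1: cluster CA certificate (default: /etc/kubernetes/pki/ca.crt on the Master)
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  # SHA-256 over the DER-encoded public key of the CA certificate
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on the Master:
#   echo "sha256:$(ca_cert_hash)"
#   kubeadm token create --print-join-command
```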

Install the network plugin

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@qwq-test01 ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

kube-flannel.yml

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.13.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.13.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
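The "Network" value in net-conf.json should match the --pod-network-cidr=10.244.0.0/16 passed to kubeadm init, because with --kube-subnet-mgr flannel takes each node's subnet from the podCIDR that Kubernetes allocated out of that range. A small sketch to check this before applying a modified manifest; the function names are hypothetical and the sed pattern assumes the one-key-per-line JSON layout used in the manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "Network" value from a kube-flannel.yml.
flannel_network() {
  sed -n 's/^[[:space:]]*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Hypothetical helper: compare it with the CIDR given to kubeadm init.
# $1: manifest path, $2: value of --pod-network-cidr
check_pod_cidr() {
  local manifest="$1" cidr="$2" net
  net="$(flannel_network "$manifest")"
  if [ "$net" = "$cidr" ]; then
    echo "OK: flannel Network matches --pod-network-cidr ($cidr)"
  else
    echo "MISMATCH: flannel Network=$net, --pod-network-cidr=$cidr" >&2
    return 1
  fi
}

# Usage:
#   check_pod_cidr kube-flannel.yml 10.244.0.0/16 && kubectl apply -f kube-flannel.yml
```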

get nodes

[root@qwq-test01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

qwq-test01 Ready master 3h31m v1.14.2

qwq-test02 Ready <none> 146m v1.14.2

qwq-test03 Ready <none> 14m v1.14.2
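With several nodes, it helps to filter the kubectl get nodes output for nodes that have not yet reached Ready (for example while flannel is still starting on a newly joined node). A minimal awk sketch with a hypothetical helper name, not_ready_nodes; it assumes the default column layout with STATUS in the second column, so a cordoned node (Ready,SchedulingDisabled) is printed as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get nodes | not_ready_nodes
#   kubectl wait --for=condition=Ready node --all --timeout=300s   # block until all are Ready
```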

Uninstall

[root@qwq-test01 ~]# kubeadm reset

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

[reset] Removing info for node "qwq-test01" from the ConfigMap "kubeadm-config" in the "kube-system" Namespace

W1123 19:31:59.892367 28355 reset.go:158] [reset] failed to remove etcd member: error syncing endpoints with etc: etcdclient: no available endpoints

.Please manually remove this etcd member using etcdctl

[reset] Stopping the kubelet service

[reset] unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of stateful directories: [/var/lib/etcd /var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes]

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually.

For example:

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.
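The manual steps that kubeadm reset leaves to the operator can be collected in one place. A sketch with a hypothetical function name, post_reset_cleanup: it prints the iptables flush commands from the message above, ipvsadm --clear when ipvsadm is installed, and the removal of $HOME/.kube/config, which is assumed stale because the next kubeadm init generates a new CA. It only executes the commands when RUN=1 is set.

```shell
#!/usr/bin/env bash
# Hypothetical helper: cleanup after `kubeadm reset`.
# Prints the commands by default; set RUN=1 to execute them (as root).
post_reset_cleanup() {
  local cmds=(
    "iptables -F"
    "iptables -t nat -F"
    "iptables -t mangle -F"
    "iptables -X"
    "rm -f $HOME/.kube/config"   # old kubeconfig points at the previous cluster CA
  )
  # IPVS tables only matter if kube-proxy ran in IPVS mode
  if command -v ipvsadm >/dev/null 2>&1; then
    cmds+=("ipvsadm --clear")
  fi
  local c
  for c in "${cmds[@]}"; do
    if [ "${RUN:-0}" = "1" ]; then $c; else echo "$c"; fi
  done
}

# Usage:
#   post_reset_cleanup          # review
#   RUN=1 post_reset_cleanup    # execute
```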

Deploy an application

deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-app
  template:
    metadata:
      labels:
        app: nginx-app
    spec:
      containers:
      - name: nginx-app
        image: nginx
        ports:
        - containerPort: 80
          hostPort: 8001
        resources:
          requests:
            cpu: 1
            memory: 500Mi
          limits:
            cpu: 2
            memory: 1024Mi

kubectl apply -f deployment.yaml

root@qwq-test04:~# kubectl apply -f nginx_deployment.yaml

deployment.apps/nginx-deploy created

get pods

root@qwq-test04:~# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

default nginx-deploy-78cf5fb487-rf7ld 1/1 Running 0 95s

Other deployment tools

ansible playbook:

https://github.com/gjmzj/kubeasz

Beginners can follow it step by step to learn how each part works, and customize it later.

https://github.com/kubernetes-incubator/kubespray

K8s lab platform: https://console.magicsandbox.com

Docker and Kubernetes versions

docker version

Client:

Version: 17.06.2-ce

API version: 1.30

Go version: go1.8.3

Git commit: cec0b72

Built: Tue Sep 5 20:12:06 2017

OS/Arch: darwin/amd64

Server:

Version: 17.06.2-ce

API version: 1.30 (minimum version 1.12)

Go version: go1.8.3

Git commit: cec0b72

Built: Tue Sep 5 19:59:19 2017

OS/Arch: linux/amd64

Experimental: true

| Kubernetes | Docker versions |
| --- | --- |
| 1.9 | 1.11.2 to 1.13.1 and 17.03.x |
| 1.8 | 1.11.2 to 1.13.1 and 17.03.x |
| 1.7 | 1.10.3, 1.11.2, 1.12.6 |
| 1.6 | 1.10.3, 1.11.2, 1.12.6 |
| 1.5 | 1.10.3, 1.11.2, 1.12.3 |
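The version list above can be encoded as a small lookup, for example to check a host before installing. A sketch with a hypothetical function name, docker_versions_for; it covers only the releases listed (1.5 to 1.9), and newer releases should be checked against their own CHANGELOG. Note that the Docker 17.06.2-ce shown above does not appear in any of these lists.

```shell
#!/usr/bin/env bash
# Hypothetical helper: Docker versions listed for a Kubernetes minor version.
# $1: Kubernetes minor version, e.g. 1.8
docker_versions_for() {
  case "$1" in
    1.9|1.8) echo "1.11.2 to 1.13.1, 17.03.x" ;;
    1.7|1.6) echo "1.10.3, 1.11.2, 1.12.6" ;;
    1.5)     echo "1.10.3, 1.11.2, 1.12.3" ;;
    *)       echo "not in this list: see CHANGELOG-$1.md" >&2; return 1 ;;
  esac
}

# Usage:
#   docker_versions_for 1.9
```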

Links:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.5.md#external-dependency-version-information


https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.6.md#external-dependency-version-information

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.7.md#external-dependency-version-information

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.8.md#external-dependencies

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.9.md#external-dependencies

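ServiceStage

Overview

Huawei Cloud ServiceStage is a microservice cloud application platform that provides one-stop, enterprise-grade microservice application management. It adapts to complex enterprise application environments and helps enterprises move to the cloud smoothly. Its full-lifecycle microservice management also reduces the complexity of deploying, frequently upgrading, running, and operating applications on the cloud.

An open, flexible resource scheduling framework based on the Kubernetes container orchestration platform supports hybrid resource orchestration, so applications can move to the cloud smoothly. An open microservice framework supports low-cost microservice transformation of existing services, and rich microservice governance capabilities keep services highly reliable in distributed cloud environments. One-stop microservice application lifecycle management covers deploy, start, stop, upgrade, roll back, and delete. Large-scale microservice call-chain tracing, application-level monitoring, fault alarms, and log analysis help quickly isolate and locate problems in distributed environments.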

Node onboarding

2017-01-15

paas-om-core installation

2017-01-15

refs

https://yq.aliyun.com/articles/626118

https://github.com/coreos/flannel/blob/master/Documentation/kube-flannel.yml

https://blog.csdn.net/ajksdhajk/article/details/84770458

https://www.cnblogs.com/zhongyuanzhao000/p/11401031.html

https://jhooq.com/kubernetes-error-execution-phase-preflight-preflight/

Updated 2020.11.11